Intraoperative AR

Augmented Reality for Endoscopic Transsphenoidal Surgery

The integration of augmented reality (AR) offers promising potential for improving surgical navigation and guidance in endoscopic transsphenoidal pituitary surgery (ETS). This study evaluates three design and interaction factors of the AR visualisations (contour style, highlight style, and input modality) through assessment of an interactive prototype.

The research methodology encompassed planning, prototyping, and user evaluation. A user task flow was constructed to delineate the interactive requirements for the AR overlay as a foundation for the prototype design. The design phase entailed the creation of rudimentary sketches and intricate 3D models of critical anatomical structures. C# scripts facilitated user interaction with the prototype via pedal inputs.

Role

Entirety

Tags

augmented reality, intraoperative AR, medicine, human factors, Unity 3D, C#

Use Case

MSc Dissertation

Time Constraint

10 Weeks

Endoscopic Transsphenoidal Surgery: Procedure flow and user needs

Endoscopic transsphenoidal surgery (ETS) is a minimally invasive neurosurgical procedure performed to access and treat certain conditions within the skull base, particularly in the region of the pituitary gland and surrounding structures; the resection of a macroadenoma is one example.

The ETS procedure consists of four main surgical phases: the nasal phase, sphenoid phase, sellar phase, and closure phase. Beginning with the nasal phase, the surgeon creates a small incision in the back of the nasal cavity or within the nostril, identifying pertinent nasal anatomy until entry into the sphenoid sinus. The endoscope is then carefully guided through the sphenoid sinus, providing a direct visual examination of the surgical site on a monitor.

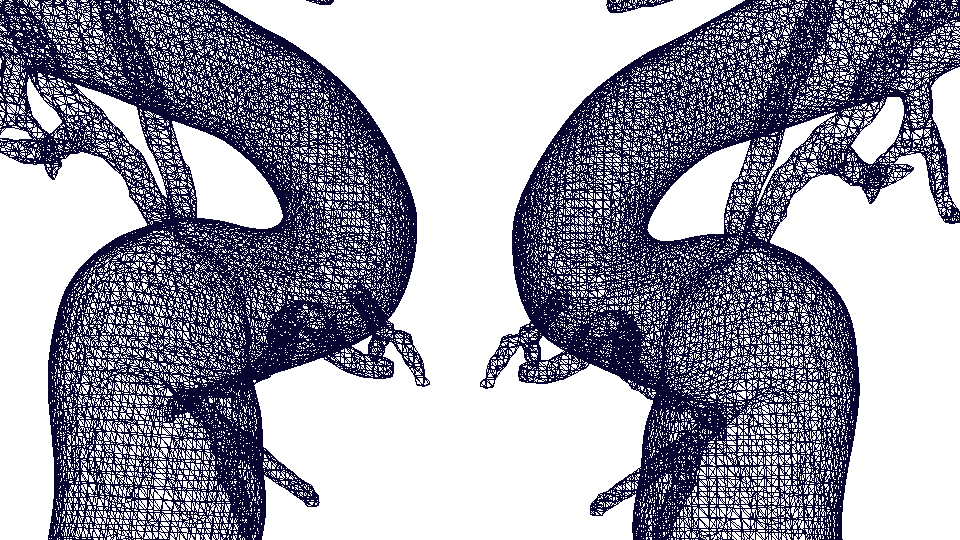

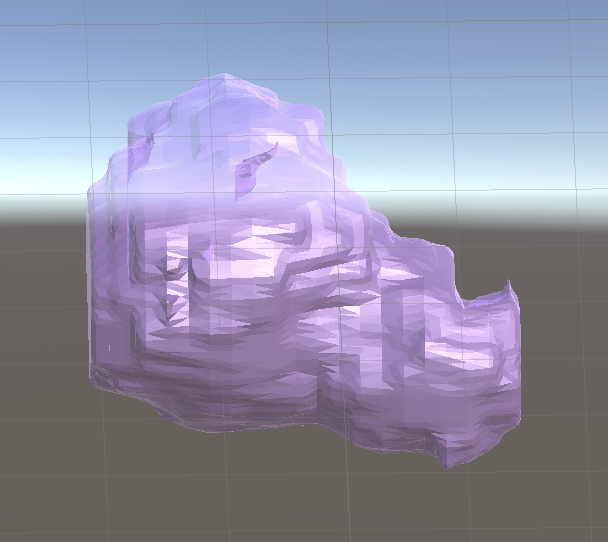

3D Models of Anatomical Structures

Based on observational and qualitative user requirements gathering by a colleague, Sonia Nkatha, and on the existing literature, the critical underlying anatomical structures to visualize in the AR overlay were identified as the optic nerve, carotid arteries, and tumour. All three structures were obtained from patient segmentations defined using ITK-SNAP and converted into the 3D object format (.OBJ); the segmentation files came from the Queen Square Institute of Neurology database. The 3D models were then imported into Blender, an open-source 3D modelling software, and converted into mesh wireframe structures using a ‘Wireframe’ modifier for the mesh contour style condition. The thickness and density of the mesh wireframes were adjusted to ensure a lean design, which was determined to be desirable for the wireframe contours (see Figure 6). The solid structures and the mesh structures were imported into Unity, where the transparent color materials were applied. A paler, translucent color palette was chosen to reduce distraction and the risk of inattentional blindness.

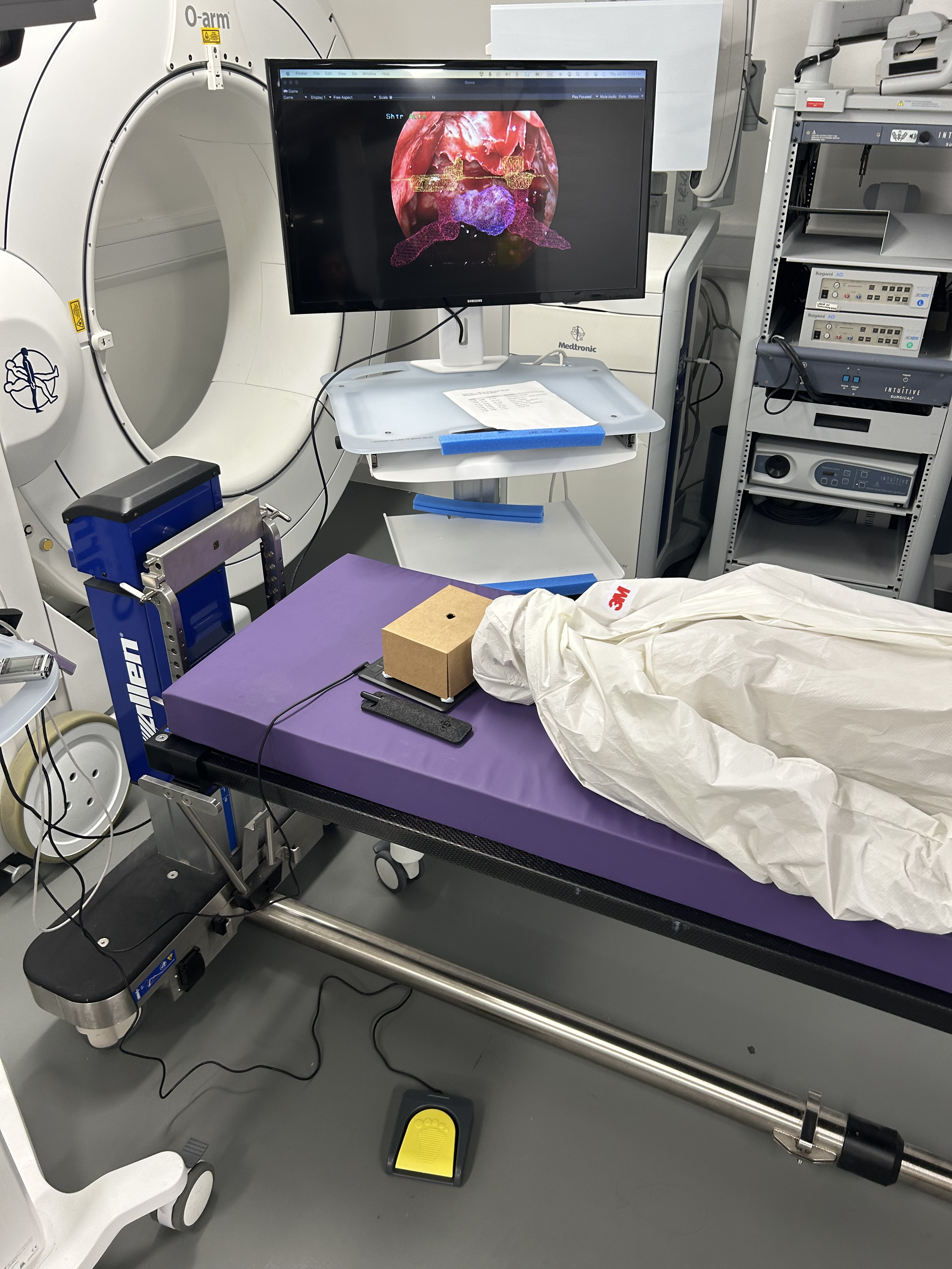

Building Virtual Environment in Unity 3D

To begin building out the AR scenario, all the materials were imported into a Unity scene and configured with the correct scripts for user interactivity. The objects were grouped and placed in the object hierarchy for easy manipulation during the evaluation study.

A canvas was created and textured with the 2D endoscopic image as the main site of interaction. To control for distraction and confounding variables, we opted to map the screen with a static image of the endoscopic view captured from a patient video (anonymized and used with consent). We then modeled the AR environment to simulate the endoscopic projection itself, which was displayed on a physical monitor. This monitor was connected to a GAOMON drawing tablet, which was used for the primary user navigation task during the evaluations.

The 3D objects were scaled, rotated, and translated to fit onto the background endoscopic image as closely as possible to the true anatomy. For every combination of contour style and highlight style, the 3D objects were adjusted to differ slightly in size and location; this was done to reduce any learning effects on the user’s rating of the usefulness of the AR overlays. The grouped anatomical objects were then sandwiched between the background image and the drawing board, all within the “Drawing Canvas” object. A Unity camera object was placed directly in front of the canvas to capture the entire screen. The camera was configured to render a black background behind any 3D objects so that the screen would look like a true endoscope imaging monitor when the scene was played.

Programming Interaction

Pedal Interaction

The pedal interactions were created through a simple C# script attached to the 3D anatomical structures. Each set of objects (tumour, carotid arteries, optic nerves) was grouped under a parent object to which the script was attached. The script controlled the pedal behaviour through a series of if-else statements and for loops, driven by the highlight style input and the toggle style Boolean values. The pedal was connected to a driver, ElfKey, which mapped a pedal press to a keyboard hot key; in this case, the spacebar was chosen. Using the new Unity Input System package, the first if statement evaluates whether the foot pedal is pressed, and the script then evaluates whether the user had entered ‘Toggle’, ‘Color’, or ‘Opacity’ as the ‘Highlight style’ String input. For each of these conditions, a separate function was written and called within the main body.
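The branching described above can be sketched in a few lines. This is a language-neutral illustration written in Python, not the Unity C# script used in the prototype. The `Overlay` class, the `handle_pedal` function, the colour names, and the opacity values are all hypothetical, and what each highlight style actually changes on a pedal press is an assumption made for the example.

```python
from dataclasses import dataclass


@dataclass
class Overlay:
    """Hypothetical stand-in for one group of anatomical objects (e.g. the tumour)."""
    name: str
    visible: bool = True
    color: str = "pale"       # placeholder colour names, not the study palette
    opacity: float = 0.4      # placeholder alpha values
    highlighted: bool = False


HIGHLIGHT_STYLES = ("Toggle", "Color", "Opacity")


def handle_pedal(overlays, highlight_style, pedal_pressed):
    """Apply one frame of pedal input to every overlay in the list."""
    if not pedal_pressed:  # first check: was the pedal (mapped to the spacebar) pressed?
        return
    if highlight_style not in HIGHLIGHT_STYLES:
        raise ValueError(f"Unknown highlight style: {highlight_style!r}")
    for overlay in overlays:
        if highlight_style == "Toggle":
            # Assumed behaviour: show or hide the overlay entirely.
            overlay.visible = not overlay.visible
        elif highlight_style == "Color":
            # Assumed behaviour: switch between a pale and a bright colour.
            overlay.highlighted = not overlay.highlighted
            overlay.color = "bright" if overlay.highlighted else "pale"
        else:  # "Opacity"
            # Assumed behaviour: switch between a low and a high alpha value.
            overlay.highlighted = not overlay.highlighted
            overlay.opacity = 0.9 if overlay.highlighted else 0.4
```

In a Unity script the same check would typically run once per frame, with the loop walking over the child objects of the parent group.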

Drawing Task

The second interaction that needed to be built was the primary navigational task. The task was designed to emulate the occlusion, cognitive load, and reliance on the endoscopic projection that are seen during the removal of the sellar wall, identified as the sellotomy in the sellar phase by Dr. Hani Marcus. The sellotomy is performed once the surgeon has reached the skull base and is preparing for the tumour excision. It is done with the endoscope fixed in position, as the doctor no longer needs to traverse the nasal passage. An asset code base was used to create an overlaying drawing canvas on which the user could draw or outline the area of interest where they plan to perform the sellar removal. It is important to emphasize that this task is not an exact simulation of the sellotomy, but rather a task that places participants in a context where they have to cognitively engage with, and interact with, the AR overlays in order to complete the navigational task.

User Evaluations

Four neurosurgical experts were recruited for this study. Participants were recruited from a hospital through a network of surgical innovation fellows. The study was conducted with the target users, with emphasis on a user-centered approach to refining the design guidelines for intraoperative ETS AR visualisations. All interviews were conducted in English. All participants were familiar with, or had participated in, real-life endoscopic transsphenoidal pituitary surgeries.

The participants were told that the objective of the task was to expose the tumour while avoiding the critical structures. It was emphasized that the motion used to outline the area does not mimic the motion a neurosurgeon would use when removing the sellar wall; rather, the task was meant to replicate one in which the user relies on the endoscopic projection to help with navigation.

The participant was then told that the highlight style would be controlled by the foot pedal and was encouraged to interact with the pedal to familiarize themselves with it before beginning the task evaluations. The location of each critical structure was made to differ slightly between conditions so that the participant would rely on the AR overlays for guidance equally in each scenario. However, for all scenarios, they were asked to assume that the overlays were accurately located according to the patient’s pre-op scans.

To begin part one, the participant was asked to use the pedal to toggle/highlight the overlays. While they used the pen and tablet to outline the area of interest, they were asked to voice their thoughts in a “think-aloud” format. The researcher also prompted feedback through questions about their initial impression of the contour style, the distraction of the contour style/highlight style, how they would improve the particular contour style/highlight style, etc. This process was repeated for all 8 design combinations.

After concluding the first part, the participant was asked to rank the 8 [contour x highlight] style combinations in order of most to least favourable. They were then asked questions about why they chose the rankings and how they would improve the most favourable condition if they were able to adjust it.
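One simple way such rankings could be summarised across participants is a mean rank per condition. The sketch below is an illustration only and not the analysis used in this study. The function name `mean_rank_per_condition` is hypothetical, and the condition labels and orderings are placeholders rather than study data.

```python
from statistics import mean


def mean_rank_per_condition(rankings):
    """Return (condition, mean rank) pairs, lowest (most favourable) mean rank first.

    `rankings` holds one list per participant, ordered from most to least favourable.
    """
    positions = {}
    for order in rankings:
        for rank, condition in enumerate(order, start=1):
            positions.setdefault(condition, []).append(rank)
    summary = [(condition, mean(ranks)) for condition, ranks in positions.items()]
    return sorted(summary, key=lambda pair: pair[1])


# Placeholder labels and orderings for illustration only; not data from the study.
example = [
    ["A", "B", "C"],
    ["B", "A", "C"],
    ["A", "C", "B"],
]
print(mean_rank_per_condition(example))
```

With only four participants, a summary like this is descriptive at best and would sit alongside the qualitative reasons participants gave for their rankings.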

They were then asked to continue onto part two, which was essentially the same task but focused on the pedal interaction. This concluded the user evaluation study.

Results + AR Design Guidelines

AR must not obscure patient anatomy. A lean mesh contour style was most appreciated as it allowed the surgeon to see “through” the AR overlays and check the AR against the patient anatomy (as the ground truth). Users still trust the AR less than their own expertise, so being able to see the patient anatomy (or even turn off the AR completely) is crucial.

AR must have clear + distinct boundaries. Although a lean mesh is the most desired contour style due to the ability to see the patient anatomy, the actual boundaries of the 3D structures should be distinct, whether that is controlled by thickness, brightness, or color selection. This will reduce any strain or stress put on the user.

Color choices for the AR visualisations must be intuitive. They should reflect academic textbook color choices: for example, “oxygenated” blood or arteries should be red, whereas “non-oxygenated” blood or veins should be blue.

Interactions between structures are important to see. The AR must not display only a single structure at a time; users should be able to see where the structures are in relation to each other and how they interact.

Reflections

— Musing N°1

Even if a lot of resources are invested into building out an AR system, you must consider systemic constraints and willingness to adopt. Stakeholder buy-in therefore becomes even more important, and is more likely if you involve the end users in the design process. The neurosurgeons who were involved were very excited about this potential new technology, and the prospect of being pioneers within their field. It is vital to have these initial users act as ambassadors in order to ensure that the technology properly benefits clinicians and improves their experience. USER-centered design!

— Musing N°2

Building out an augmented reality prototype in Unity 3D for a 2D display may not be where advanced AR technology is most appreciated. In discussions about the future of spatial computing, some AR experts emphasized that we must approach AR as a tool to expand beyond what we can feasibly do in 2D space. Future work should therefore explore whether displaying these 3D models on a 2D endoscopic monitor even makes sense.

— Musing N°3

This led me to become curious about computer vision, and I am currently researching how to build a testbed for a foundation AI model with Dr. Mobarakol Islam. This research project explores how various image corruptions may impact the results of an image recognition model and how we could quantitatively measure the reliability of various AI models.